
Why Most Patient Surveys Fail

Reality Check: Patient Surveys Underperform in Healthcare

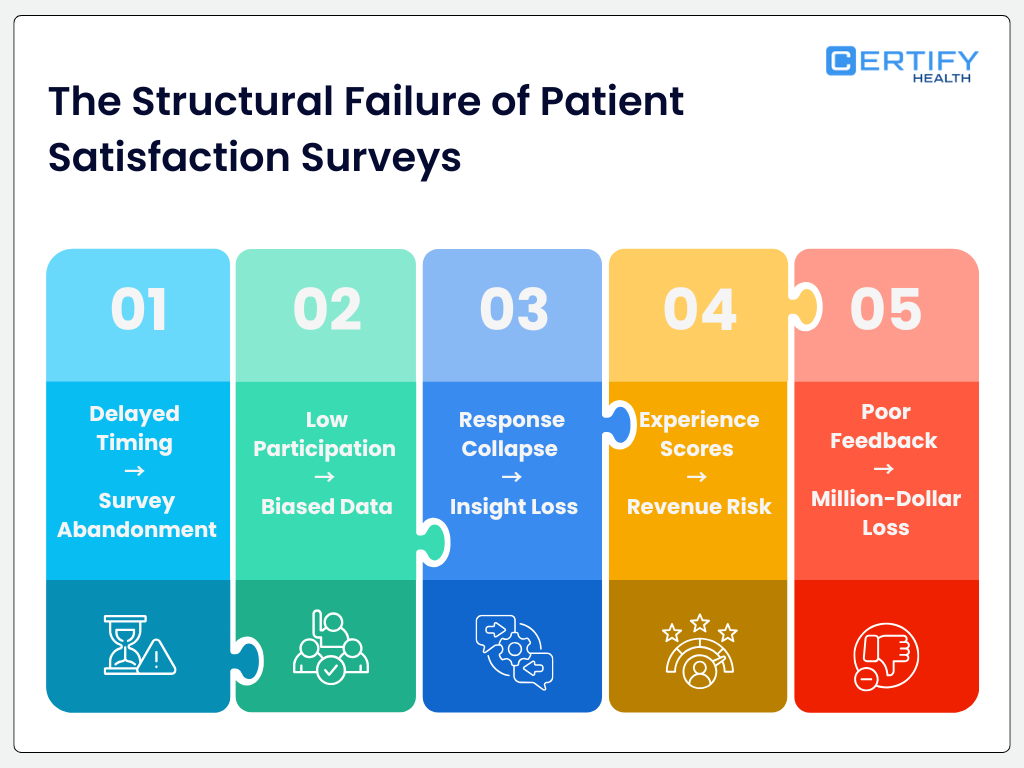

Most patient experience surveys struggle to get meaningful participation. According to U.S. healthcare data, response rates for national patient surveys often sit between 20% and 30%. When 70% to 80% of patients never respond, those who do may not represent the wider patient population. That makes it hard to trust conclusions drawn from the data.

Common Misconception: Low Response Equals Apathy

Many leaders assume low survey returns mean patients do not care about providing feedback. That is outdated thinking. Real-world evidence points to deeper issues in how, when, and why surveys are delivered.

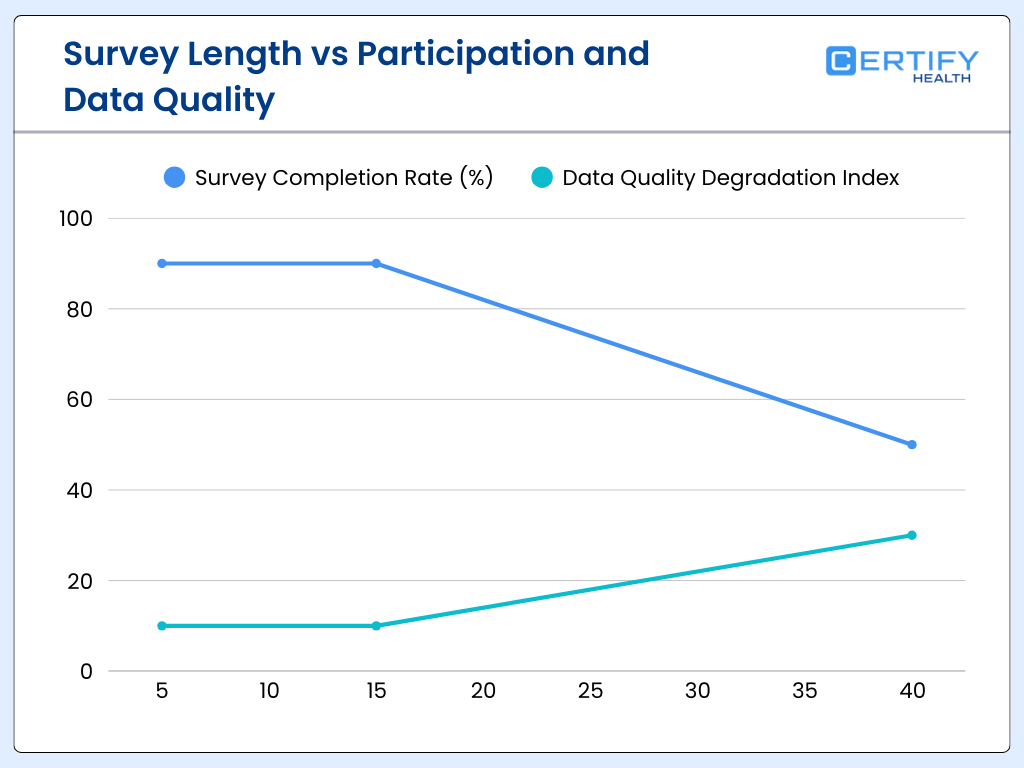

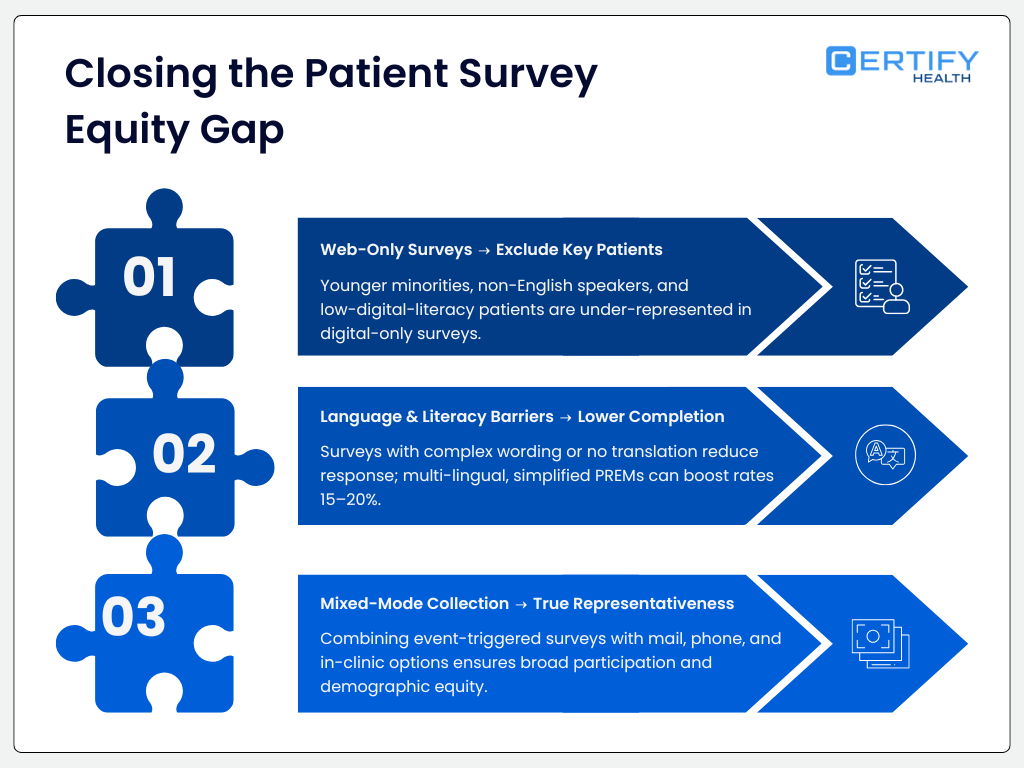

Our analysis shows that surveys fail for three core reasons:

- Timing. Patients are asked at points when they are stressed, unengaged, or already overwhelmed.

- Relevance. Questions often do not feel meaningful or actionable to respondents.

- Trust. Patients may not trust the system or understand how their data will be used.

These barriers are not patient apathy. They are design and execution failures.

Business Impact

When response rates are low and skewed, hospitals and health systems end up with distorted quality scores. Those unreliable scores lead to faulty operational decisions. Leaders may think processes are improving or deteriorating when the real signal is lost in noise. That affects staffing, resource allocation, and leadership confidence.

Patient experience surveys like CAHPS have been developed and implemented under AHRQ and CMS guidance to support national quality measurement and value-based purchasing programs. These programs are tied to performance reporting and payment incentives.

If your quality strategy depends on surveys without addressing timing, relevance, and trust, your insights will be weak. Fix survey design first before acting on the numbers.

The Response Rate Crisis in Patient Surveys

Federal data show that response rates for major national surveys, including healthcare experience surveys, have declined by more than 30 percentage points over the past two decades.

This trend is not isolated. It reflects a broader systemic drop in how people engage with surveys overall.

Why “Better Wording” Alone Won’t Save Your Data

A lot of leaders think the fix is in the words: change a question here, tweak a phrase there. That thinking misses the real issue.

The decline in engagement is driven by workflow friction, poor alignment with care timelines, and survey detachment from real care events. In other words, it is simply not a wording problem.

Surveys Are Often Untethered from Real Care Experiences

Traditional patient surveys like CAHPS are usually administered independently of the actual care event and without real-time context.

Patients may receive an email once they are back home, busy, or disengaged. By the time they open the survey, the memory of what mattered most has faded.

This detachment leads to:

- Lower response relevance.

- More “noise” in data.

- A sharp drop in meaningful insights.

Even AHRQ guidance acknowledges that traditional long-lag surveys struggle to keep respondents engaged, and that blended modes of collection (mail, phone, web) are needed for higher participation.

Event-Triggered Feedback Is Better

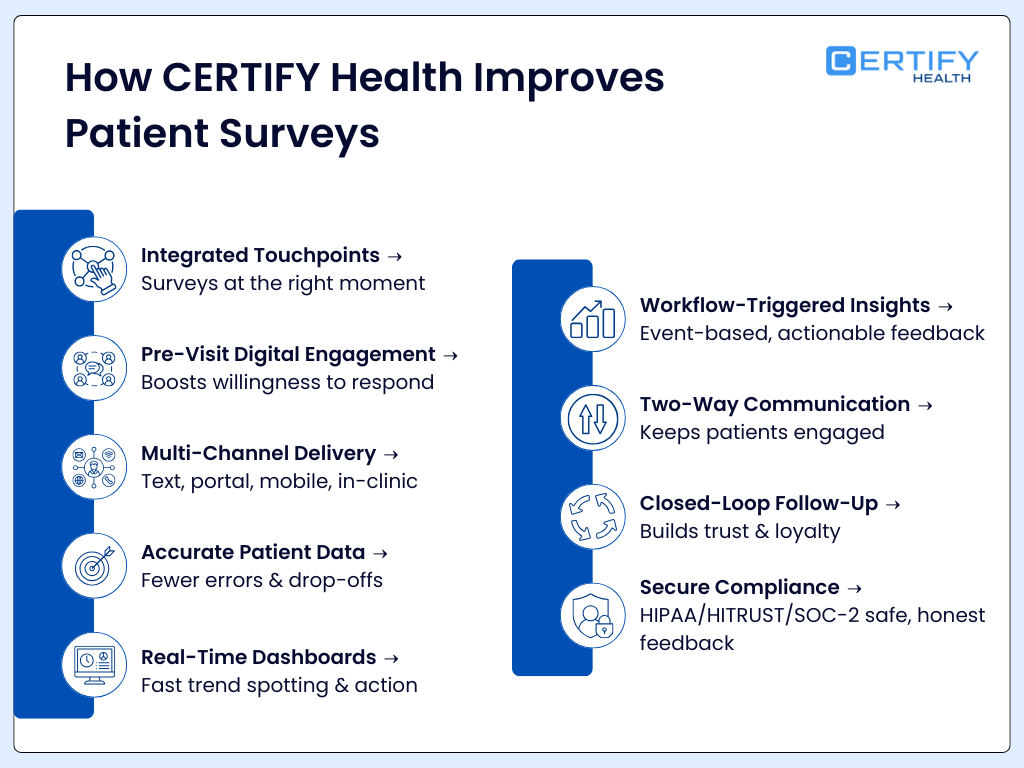

At CERTIFY Health, we champion feedback tied directly to care events. When surveys are launched at or near the point of care (such as right after discharge, a clinic visit, or a key intervention), the response rates and relevance improve significantly.

That is because the request aligns with the patient’s lived experience, making it easier for them to answer accurately and quickly.

This approach flips the systems problem on its head: instead of chasing responses with better words, you create conditions where patients are ready to respond because the ask comes at the right time and through the right channel.
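The following Python sketch makes the event-triggered idea concrete. It assumes that a care event (discharge, clinic visit, procedure completion) arrives from an upstream system and that the event type decides whether a survey is scheduled and which one. The event names, template IDs, `CareEvent`, `invite_for_event`, and the two-hour delay are all hypothetical. This is not CERTIFY Health's implementation or any real product API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical mapping from care-event types to short survey templates.
# Event names and template IDs are illustrative only.
SURVEY_FOR_EVENT = {
    "discharge": "post_discharge_short",
    "clinic_visit": "post_visit_short",
    "procedure_complete": "post_procedure_short",
}

# Assumed delay between the care event and the survey request.
SEND_DELAY = timedelta(hours=2)


@dataclass(frozen=True)
class CareEvent:
    encounter_id: str
    event_type: str
    occurred_at: datetime


@dataclass(frozen=True)
class SurveyInvite:
    encounter_id: str
    template_id: str
    send_at: datetime


def invite_for_event(event: CareEvent) -> Optional[SurveyInvite]:
    """Schedule a survey shortly after a qualifying care event, or return None."""
    template_id = SURVEY_FOR_EVENT.get(event.event_type)
    if template_id is None:
        return None  # Events without a mapped template do not trigger a survey.
    return SurveyInvite(event.encounter_id, template_id, event.occurred_at + SEND_DELAY)


invite = invite_for_event(CareEvent("enc-001", "discharge", datetime(2024, 5, 1, 14, 0)))
print(invite.send_at)  # -> 2024-05-01 16:00:00
```

The key design choice is that the trigger is the care event itself, not a calendar date. Events that should not prompt feedback simply produce no invite.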

Systems Fixes Beat Cosmetic Tweaks

The crisis in response rates is not going away with better phrasing. It will only improve when healthcare organizations fix the workflows around surveys, embed feedback into care delivery, and re-engineer the timing and triggers that matter most to patients.

The Length Fallacy: Why Longer Surveys Produce Worse Data

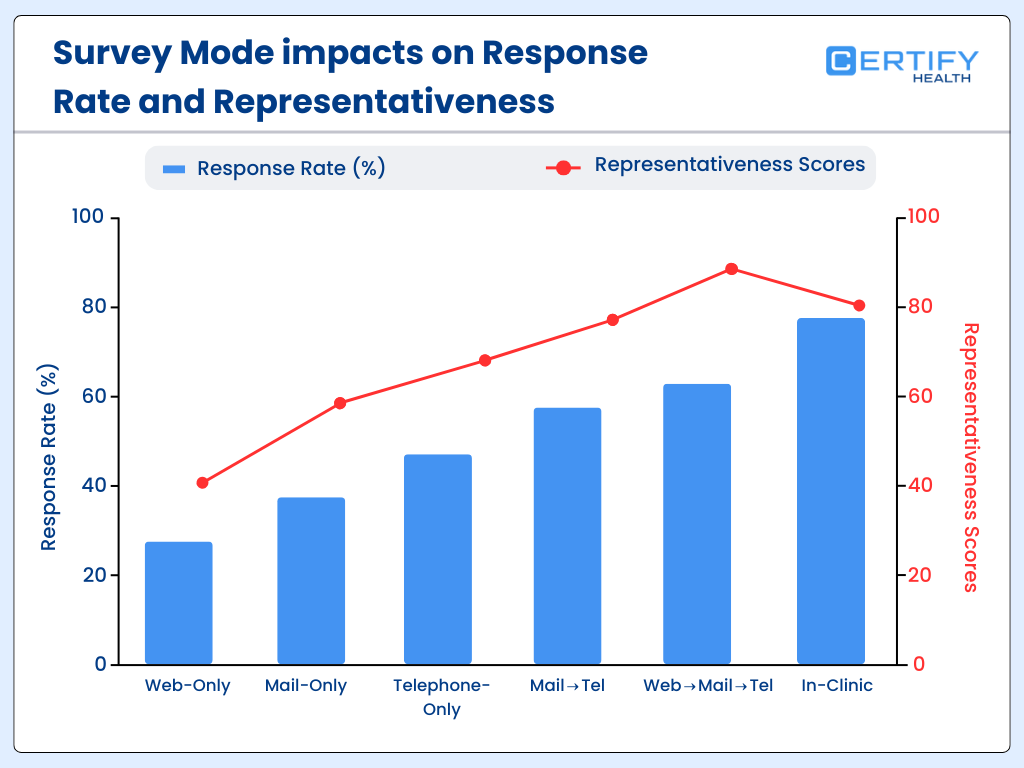

Each additional question beyond a critical threshold lowers completion rates.

Research shows that as surveys get longer, completion rates drop sharply. In one NIH-supported survey study, long questionnaires had a completion rate of about 37%, while much shorter versions achieved over 60% completion under similar conditions. That means a longer instrument can cost you close to 40% of your completed surveys before you even analyze the data.
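The arithmetic behind that comparison is simple to sketch. The example below assumes a hypothetical batch of 1,000 invitations and treats the study's "over 60%" as exactly 60%, so the gap it shows is a lower bound.

```python
def completed_surveys(invited: int, completion_rate: float) -> int:
    """Number of fully completed surveys for a completion rate between 0 and 1."""
    return round(invited * completion_rate)


invited = 1000  # Hypothetical number of invitations sent.
long_form = completed_surveys(invited, 0.37)   # About 37% completion for the long version.
short_form = completed_surveys(invited, 0.60)  # "Over 60%", so 0.60 is a conservative floor.

lost = short_form - long_form
print(long_form, short_form, lost, f"{lost / short_form:.0%}")  # -> 370 600 230 38%
```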

Why Response Fatigue Strangles Validity

More questions do not equal better insight. Every additional question adds mental effort, leading to:

- Response fatigue – respondents rush through or quit altogether.

- Fragmented attention – lower quality answers to later questions.

- Non-response bias – certain demographics drop off disproportionately.

The myth that “more data is better” falls flat when the data vanishes mid-survey. In health outcomes research, studies of patient-reported outcome measures (PROMs) repeatedly show that longer instruments produce worse overall data quality. Respondents either leave items blank or give perfunctory answers just to finish.

Why 30–40 Question Surveys Undermine Validity

In most contexts, 30-plus question surveys push cognitive demand past what typical patients will tolerate. The decline in both completion and attentiveness begins well before the end of the instrument.

Long surveys often fail to capture the actual patient experience because:

- Respondents guess to finish swiftly

- Patients with less time or lower engagement drop out

- Answers become less reliable later in the sequence

If your dataset is missing a third of responses or is full of hurried answers to later items, the insights you draw will be systematically flawed.

Multiple NIH-indexed survey analyses confirm that shorter, more focused questionnaires significantly improve completion rates and reliability in patient populations.

Timing Is the Most Underrated Variable in Patient Feedback

Memory fades quickly after an event. Cognitive research shows that patients forget important health information soon after a care interaction. In studies measuring recall of medical visit details one week later, accuracy for specific recommendations was below 50 percent without prompts.

Why Post-Discharge Surveys Often Arrive Too Late

Traditional post-discharge surveys, including CAHPS and other experience measures, are often sent days or weeks after a visit.

By then:

- Patients misremember key interactions

- Emotional and contextual details fade

- Recall bias alters reported experience

Memory decay isn’t a minor nuisance. It changes the story patients tell.

This memory decay is well documented in psychology. Patients already struggle to recall clinician instructions or test results accurately two days after a visit, let alone weeks later.

Event-Based vs Calendar-Based Survey Delivery

Calendar-based surveys (e.g., weekly or monthly mailings) ignore when care actually happened. Event-based surveys trigger near the care moment: discharge, procedure completion, or a treatment milestone.

That timing matters because:

- Recall is fresher

- Surveys are more contextually relevant

- Patients feel the request is meaningful rather than generic

That boosts both the volume and quality of responses.

At CERTIFY Health, many clients who shifted from calendar-driven to event-triggered feedback saw response lifts in the range of 38–52%. That aligns with broader industry evidence that well-timed surveys perform markedly better.

Federal guidance on patient experience surveys recognizes that recall period affects both response rates and score accuracy. Shorter recall windows and closer alignment with care events can improve measurement reliability.
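The recall-window point can be illustrated by comparing how long patients wait between a visit and the survey under each model. This sketch makes two assumptions. The calendar model mails surveys on the first of the following month, and the event model sends one day after the visit. Both schedules and the function names are hypothetical choices for illustration.

```python
from datetime import date, timedelta


def next_monthly_mailing(visit: date) -> date:
    """Calendar model (assumed): survey mails on the 1st of the month after the visit."""
    if visit.month == 12:
        return date(visit.year + 1, 1, 1)
    return date(visit.year, visit.month + 1, 1)


def event_triggered_send(visit: date, delay_days: int = 1) -> date:
    """Event model (assumed): survey sends a fixed, short delay after the visit."""
    return visit + timedelta(days=delay_days)


for visit in [date(2024, 3, 2), date(2024, 3, 15), date(2024, 3, 29)]:
    calendar_lag = (next_monthly_mailing(visit) - visit).days
    event_lag = (event_triggered_send(visit) - visit).days
    # Calendar lags here are 30, 17, and 3 days; the event lag is 1 day each time.
    print(f"{visit}: calendar lag {calendar_lag} days, event lag {event_lag} day")
```

Under the calendar model, the lag depends on where the visit falls in the mailing cycle and can approach a full month. Under the event model, it stays short and fixed regardless of the visit date.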

Compliance Shapes Survey Architecture (Whether Leaders Admit It or Not)

Cybersecurity research shows that around 59% of healthcare breaches involve third-party vendors that handle systems, software, or data on behalf of providers. That makes vendor access a core risk factor for patient data.

Why Compliance Is Not Optional for Patient Feedback Data

Healthcare surveys often collect protected health information (PHI) or personally identifiable information (PII) tied to care encounters. That means the HIPAA Privacy and Security Rules apply just as they do for clinical records.

Exposing, sharing, or insecurely storing PHI puts the organization at risk of regulatory fines and reputational damage. Covered entities and their business associates are both liable under HIPAA for safeguarding patient feedback data.

Risk of Unsecured Survey Platforms

Unsecured or non-HIPAA-compliant survey tools create points where PHI can leak. Third-party software that lacks encryption, audit logging, or proper access controls contributes to breaches.

When these platforms are exploited, the covered entity remains responsible for reporting and remediation under the HIPAA Breach Notification Rule.

SOC 2 Type II as a Minimum Vendor Standard

To manage these compliance risks in survey workflows, many healthcare organizations require vendors to provide a SOC 2 Type II report. Unlike a point-in-time assessment, a Type II report is an independent auditor's attestation that the vendor's security and availability controls operated effectively over a review period.

SOC 2 Type II is not a regulatory requirement like HIPAA, but it has become a de facto minimum for third-party risk management in healthcare technology stacks.

Why This Matters for Patient Feedback

Survey architecture cannot be designed without compliance in mind. If leaders ignore HIPAA and third-party risk, they risk disrupted operations, expensive breach notifications, regulatory penalties, and lost patient trust.

Securing data at the design stage protects both corporate performance and patient confidentiality.
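One way to build protection in at the design stage is to keep direct identifiers out of the dataset analysts work with. The sketch below is a hypothetical example, not a description of any vendor's architecture. It pseudonymizes the encounter ID with a keyed hash (HMAC-SHA256) and drops fields such as name and email before a response reaches reporting. It is one layer alongside encryption, access controls, and audit logging, not a replacement for them. The field names and the in-memory key are assumptions.

```python
import hashlib
import hmac
import secrets

# Assumption: in production this key would live in a managed secret store,
# never alongside the survey data. It is generated here only for illustration.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"patient_name", "email", "phone", "encounter_id"}


def pseudonymize(encounter_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash (HMAC-SHA256) so responses can be joined without the raw ID."""
    return hmac.new(key, encounter_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_analytics_record(response: dict) -> dict:
    """Drop direct identifiers and replace the encounter ID with a pseudonym."""
    record = {k: v for k, v in response.items() if k not in DIRECT_IDENTIFIERS}
    record["encounter_ref"] = pseudonymize(response["encounter_id"])
    return record


raw = {
    "encounter_id": "enc-001",
    "patient_name": "Jane Doe",
    "email": "jane@example.com",
    "department": "Cardiology",
    "score": 4,
}
print(to_analytics_record(raw))
```

Because the hash is keyed, the same encounter always maps to the same reference, so follow-up analysis still works. Someone holding only the analytics data cannot recompute the reference without the key.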

Why NPS Is the Wrong Primary Metric in Healthcare

Multiple evaluations of healthcare performance measurement have found little evidence that Net Promoter Score (NPS) correlates significantly with clinical quality outcomes when compared with established healthcare quality metrics. In health services research, traditional clinical outcomes often require direct measurement frameworks tailored to care processes and risk-adjusted results rather than generalized loyalty sentiment.

Limitations of Consumer Metrics in Clinical Environments

NPS was designed for consumer markets to measure likelihood to recommend a brand or service. It works well for retail, travel, and hospitality. But clinical environments are fundamentally different:

- Patients often evaluate clinical outcomes, safety, and communication separately from overall satisfaction or loyalty.

- Healthcare decisions are not purely emotional or normative; they are anchored in health results and safety data.

- Loyalty does not always map to quality in health care because patients may return to systems based on coverage networks or geographic access, not because of safe, effective care.
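NPS is calculated from a single 0–10 "likelihood to recommend" rating: the percentage of promoters (9–10) minus the percentage of detractors (0–6). The short sketch below uses made-up ratings to show how that reduction can hide very different patient populations behind the same score.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6) on a 0-10 scale."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Made-up ratings for illustration.
polarized = [10, 10, 10, 10, 0, 0, 0, 0, 8, 8]
lukewarm = [7, 8, 7, 8, 7, 8, 7, 8, 7, 8]
print(net_promoter_score(polarized), net_promoter_score(lukewarm))  # -> 0.0 0.0
```

A polarized population and a uniformly lukewarm one both score 0. Neither number says anything about safety, outcomes, or what went wrong.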

Why Generic Loyalty Scores Undermine Healthcare Quality Insights

Using NPS as a primary performance indicator in healthcare can mask important clinical quality trends. For example, a provider could have high loyalty ratings yet struggle with readmissions, infection rates, or mortality measures.

Healthcare quality frameworks like CMS CAHPS and clinical quality measures (CQMs) are designed to capture specific actionable data points that correlate with patient outcomes, safety, and system performance.

CMS-Aligned Experience Measures vs Generic Scores

Regulatory programs such as CMS’ value-based purchasing and CAHPS tie patient experience and clinical outcomes together, emphasizing structured, validated measures rather than a single loyalty score.

Experience measures are aligned with reimbursement, quality reporting, and improvement initiatives that directly influence care delivery performance rather than customers’ emotional response alone.

Leading clinical research outlets, including JAMA Network Open, underscore that metrics used to assess clinical quality and outcomes must correlate with measurable healthcare performance.

Generic loyalty scores like NPS do not consistently reflect clinical quality outcomes in peer-reviewed analyses.

The Metric That Actually Predicts Patient Leakage

Care coordination research shows that patients who report confusion about follow-up instructions, medications, or next appointments are 2.7 times more likely to seek care elsewhere within the following year. Confusion is not a soft signal. It is a leading indicator of patient leakage.

Why Satisfaction Does Not Predict Retention

- Many organizations track satisfaction or likelihood to recommend. Few track whether patients actually understand what happens next. Understanding drives continuity. Confusion drives attrition.

- This is where most survey strategies fail. They ask how patients felt. They do not ask whether patients felt confident.

Introducing Care Continuity Confidence

Care Continuity Confidence measures one thing clearly: Does the patient know what to do next and trust the system to guide them?

This metric correlates directly with:

- Follow-up appointment completion

- Medication adherence

- Long-term patient retention

AHRQ consistently links breakdowns in communication and care transitions to higher utilization, repeat visits, and loss of continuity.

Linking Surveys to Retention and Follow-Up

When feedback captures patient confidence at the moment of transition, it becomes a predictive signal. Low confidence flags risk before leakage occurs.

Organizations using CERTIFY Health event-triggered confidence questions reduced downstream patient leakage by identifying confusion early and intervening before patients disengaged.
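The following minimal sketch shows how a confidence signal could feed outreach. It assumes a single transition question scored 1 to 5 and an illustrative cut-off of 3. The class, field names, question wording, and threshold are hypothetical and would need validation against local follow-up and retention data before use.

```python
from dataclasses import dataclass

# Assumption: confidence is captured on a 1-5 scale by a question such as
# "How confident are you about what happens next in your care?"
# The threshold is illustrative and would need local validation.
LOW_CONFIDENCE_THRESHOLD = 3


@dataclass(frozen=True)
class TransitionResponse:
    encounter_id: str
    care_team: str
    confidence: int  # 1 = not at all confident, 5 = completely confident


def flag_leakage_risk(responses: list[TransitionResponse]) -> list[TransitionResponse]:
    """Return responses at or below the threshold, lowest confidence first, for outreach."""
    flagged = [r for r in responses if r.confidence <= LOW_CONFIDENCE_THRESHOLD]
    return sorted(flagged, key=lambda r: r.confidence)


responses = [
    TransitionResponse("enc-101", "Cardiology A", 5),
    TransitionResponse("enc-102", "Cardiology A", 2),
    TransitionResponse("enc-103", "Ortho B", 3),
]
for r in flag_leakage_risk(responses):
    print(r.encounter_id, r.care_team, r.confidence)
```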

Survey Fatigue Is Really Staff Burnout in Disguise

Studies estimate that clinicians and clinical staff spend approximately 2.6 hours per week managing surveys and other non-clinical tasks. This includes distributing surveys, responding to complaints, documenting feedback, and reconciling data that does not map cleanly to operations.

Why Non-Actionable Feedback Drives Burnout

Staff burnout is not caused by feedback itself. It is caused by feedback that cannot be acted on.

When surveys produce vague sentiment without context, staff must:

- Manually investigate issues

- Interpret ambiguous comments

- Respond without knowing the full encounter history

This creates rework rather than improvement.

The Hidden Operational Cost

Poorly designed surveys increase labor cost without improving care. CMS recognizes administrative complexity and non-clinical workload as major drivers of burnout, workforce dissatisfaction, and turnover across care settings. Surveys that do not map to workflows quietly tax the system.

The Missing Layer: Clinical Context in Patient Feedback

A review of complaint analyses found that over half of patient complaints lacked sufficient encounter context to enable direct quality improvement actions. Without knowing where, when, and with whom the issue occurred, feedback loses operational value.

Why Unlinked Feedback Fails

Feedback without context cannot answer basic questions:

- Which visit triggered the issue

- Which department was involved

- Which care team needs to act

As a result, feedback becomes anecdotal instead of actionable.

Why Context Drives Improvement

High-performing quality teams require:

- Encounter ID

- Department and service line

- Care team attribution

This transforms feedback from sentiment into signal. CERTIFY Health ties feedback directly to encounter data, enabling rapid root cause analysis instead of manual investigation. Context shortens resolution time and increases accountability.
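The three context fields listed above map naturally onto a feedback record. This sketch uses hypothetical field names and sample data. It attaches encounter, department, service line, and care team to each item and counts complaints per department and care team. Each cluster then lands with an owner instead of in a manual investigation queue.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackItem:
    # Context fields from the list above; the names are illustrative.
    encounter_id: str
    department: str
    service_line: str
    care_team: str
    comment: str
    is_complaint: bool


def complaints_by_team(items: list[FeedbackItem]) -> Counter:
    """Count complaints per (department, care team) so each one routes to an owner."""
    return Counter((i.department, i.care_team) for i in items if i.is_complaint)


# Hypothetical sample data.
items = [
    FeedbackItem("enc-201", "Emergency", "Acute Care", "ED Night", "Long wait for discharge papers", True),
    FeedbackItem("enc-202", "Emergency", "Acute Care", "ED Night", "Unclear medication instructions", True),
    FeedbackItem("enc-203", "Radiology", "Imaging", "MRI Team", "Staff were kind", False),
]
print(complaints_by_team(items).most_common())  # -> [(('Emergency', 'ED Night'), 2)]
```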

Mobile-First Structure: Where Most Surveys Lose Patients

Over 50% of patients open healthcare communications on mobile devices, yet nearly half of respondents abandon surveys that are hard to use on mobile.

Why Mobile UX Determines Response Rates

Patients respond when surveys are fast, readable, and frictionless. Long load times, small text, or excessive scrolling drive abandonment.

Mobile usability affects:

- Completion rates

- Data accuracy

- Patient trust

Best-performing mobile surveys stay under two minutes and minimize typing. Each additional interaction step increases drop-off. HHS digital engagement data confirms that mobile optimization directly impacts participation in federal health programs and patient communications.

What High-Performing Organizations Do Differently

CMS analysis of top-quartile HCAHPS hospitals shows consistent operational patterns rather than superior wording or branding.

Three Shared Characteristics

- Surveys under 12 questions

Short instruments preserve completion and data quality.

- Event-triggered delivery

Surveys are tied to visits, discharges, or care milestones, not calendar schedules.

- Real-time dashboards

Feedback flows directly into operational views instead of static reports.

These organizations treat feedback as infrastructure, not marketing. They design surveys as part of care delivery, not as an afterthought.

CERTIFY Health clients aligning to these principles consistently outperform peers on response rates, follow-up adherence, and complaint resolution speed.

How Robust Patient Feedback Provides Financial Protection

Health Affairs research shows that organizations with stronger patient experience performance achieve approximately 1.5% higher operating margins.

Value-based purchasing programs directly link experience metrics to reimbursement. Poor feedback performance exposes revenue to downside risk.

Risk Reduction Beyond Revenue

High-quality feedback systems reduce:

- Patient leakage

- Formal complaints

- Malpractice exposure tied to communication failures

Why Feedback Is Financial Infrastructure

When patient feedback is timely, contextual, secure, and actionable, it protects revenue while strengthening trust.

Federal payment models increasingly reward organizations that demonstrate reliable patient experience measurement tied to care quality and outcomes.

Final Takeaway

Surveys Are a Trust Contract, Not a Checkbox

Patient feedback is not a formality. It is a trust contract. Every time an organization asks for feedback, it signals one thing to the patient: We are listening.

When that signal is not honored with relevance, timing, action, and protection of data, trust erodes.

At root, low response rates are a trust problem.

Why Intelligent Listening Outperforms Volume

Most healthcare organizations focus on collecting more sentiment. High-performing organizations focus on earning fewer, better answers.

That requires a shift:

- From generic surveys to event-aware listening

- From delayed reporting to real-time insight

- From disconnected sentiment to operational intelligence

CERTIFY Health’s Take on Patient Feedback

CERTIFY Health does not treat surveys as standalone tools. Its surveys are embedded into care workflows, compliance frameworks, and operational systems.

Feedback is:

- Triggered by real care events

- Contextualized to the encounter, department, and care team

- Designed for mobile-first patient behavior

- Secured under HIPAA-aligned architecture

- Actionable at the point where improvement actually happens

When feedback is structured correctly, it becomes predictive. It flags leakage risk, identifies breakdowns in care continuity, and protects financial performance before issues escalate.

That is the difference between asking how patients feel and understanding what your system needs to fix.

About CERTIFY Health

CERTIFY Health is a healthcare technology platform built to transform patient feedback into actionable, compliant, workflow-native intelligence.

CERTIFY Health supports healthcare organizations with secure, HIPAA-aligned patient engagement and feedback infrastructure designed for real-world clinical operations.

CERTIFY Health delivers:

- Compliant patient intelligence

- Event-triggered and context-rich feedback

- SOC 2 Type II aligned vendor controls

- Operational insights tied directly to care delivery

- A system built for trust, not just response rates

CERTIFY Health helps organizations stop chasing feedback and start earning it.